Evolution of Processor Technologies

Processors have come a long way, largely because folks are always on the hunt for bigger, better, and faster tech. Video games and a slew of other mind-blowing innovations have really put the pedal to the metal when it comes to processor advancements.

Impact of Video Games

If you think about gaming, it’s not just about escaping reality and blasting aliens; it’s a whole tech revolution behind the scenes. Gamers are thirsty for spine-tingling, jaw-dropping experiences, which has pushed manufacturers to ramp up their specs big time. We’re talking souped-up graphics, lightning-fast processors, and serious storage capabilities in everything from consoles to PCs and mobiles.

Take a minute to give video-game-driven tech like virtual reality (VR), augmented reality (AR), and motion control a nod. These aren’t just playthings; they’re tech tools reshaping sectors across the board. Fields like healthcare and engineering have quietly borrowed complex algorithms and AI systems originally built for games and put them to work on their own cutting-edge tasks.

The concept of gamification has gone viral. What started as a gaming strategy now spices up otherwise mundane stuff like office training, exercise routines, and online learning, adding a little fun to the grind. Meanwhile, medical training, military strategy, and even disaster prep have reaped the benefits too, using game tech for virtual simulations and serious games that train people safely.

Cutting-Edge Innovations

The tech under the hood of processors is evolving faster than you can say “megahertz.” Two game-changers? Multi-core processors and AI, which have sent shockwaves through computing capabilities.

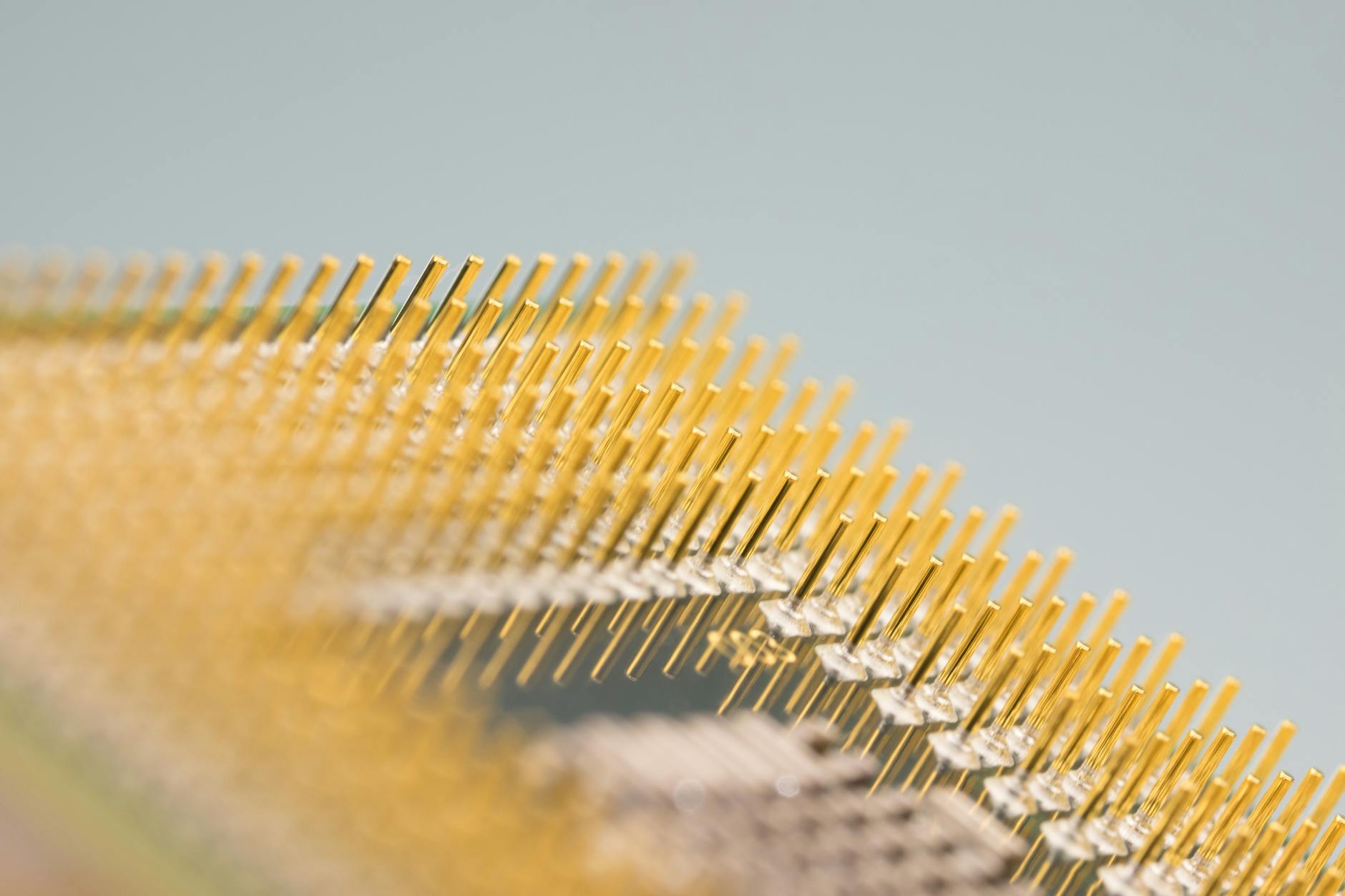

Multi-core processors grabbed pole position by making parallel processing the norm. This boosts efficiency and performance, putting consumer and enterprise equipment on the same speedy footing. Then there are the micro-wonders of nanotechnology: think Feelit’s tiny tech marvels in healthcare and predictive tech.
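Here’s what parallel processing looks like from the software side. This is a minimal Python sketch using the standard-library `concurrent.futures.ProcessPoolExecutor`, which runs chunks of work in separate processes so each can land on its own core. It assumes a CPU-bound job that splits cleanly into independent chunks (here, summing squares over a range); the helper names are made up for illustration, and the real speedup depends on your core count and process start-up overhead.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(bounds):
    """CPU-bound work on one chunk: sum i*i for i in [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n, parts):
    """Split [0, n) into at most `parts` contiguous, non-overlapping chunks."""
    step = max(1, -(-n // parts))  # ceiling division so every number is covered
    return [(lo, min(lo + step, n)) for lo in range(0, n, step)]


def parallel_sum_of_squares(n, workers=None):
    """Hand one chunk to each worker process, then combine the partial sums."""
    workers = workers or os.cpu_count() or 1
    chunks = split_range(n, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


if __name__ == "__main__":
    n = 2_000_000
    result = parallel_sum_of_squares(n)
    # Cross-check against the closed form for 0^2 + 1^2 + ... + (n-1)^2
    assert result == (n - 1) * n * (2 * n - 1) // 6
    print(result)
```

The `if __name__ == "__main__":` guard matters: on platforms that start worker processes by re-importing the script, it keeps each worker from launching its own pool.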

And don’t get us started on AI. It’s like giving processors a master’s degree in multitasking. With things like Phiar’s spatial AI and Neuron Soundware’s predictive skills, processors are tackling super complex stuff with style and smarts.

Here’s a little trip down memory lane with a table nodding to the leaps in processor generations over time:

| Processor Generation | Year Introduced | Clock Speed (GHz) | Number of Cores |
|---|---|---|---|
| Intel 80386 | 1985 | 0.016 – 0.033 | 1 |
| Pentium 4 | 2000 | 1.3 – 3.8 | 1 |
| Core i7 | 2008 | 1.6 – 3.33 | 4 |
| Ryzen 9 5950X | 2020 | 3.4 – 4.9 | 16 |

Looking back, it’s wild to see how tech jumped from modest, single-core chips to the multi-core, performance beasts we have today. With the pace we’re setting, the future promises processors that will knock our socks off.

Cool Stuff from the AI World

In recent times, AI has sparked off some pretty amazing stuff in different areas, especially in the tech that powers our electronics. Let’s break down some of the coolest AI innovations that are changing things up big time.

Feelit’s Smart Stickers

Feelit’s Smart Stickers are no ordinary stickers. They’re packed with nano-sensing wizardry and team up with a wireless gadget to spot even tiny changes in structures. This means factories can run smoother because these stickers offer super detailed insights.

| Tech | What’s It Got? | Why It’s Cool |
|---|---|---|
| Smart Stickers | High-tech nano-sensing, wireless, ultra-detailed | Boosts how well things run, awesome at keeping tabs on structures |

Wave “hi” to better productivity with these sticker sensors! (Omdena)

Phiar’s Fancy Car Magic

Phiar’s tech makes cars a lot smarter. With their Spatial AI Engine, your car can now understand the world around it way better, thanks to flashy AR (Augmented Reality) tools. No more squinting at street signs!

| Tech | Features | Why You’ll Love It |
|---|---|---|
| Spatial AI Engine | Next-level observation, AR navigation | Navigate like a boss, know what’s going on around you |

See the road differently with Phiar’s new visual magic trick! (Omdena)

Neuron’s Sound Genius

Neuron Soundware brings sound-smarts to the maintenance world. Its AI detects machinery issues just by listening in, long before they cause any trouble. Fewer breakdowns mean more savings.

| Tech | Features | Why It Rocks |
|---|---|---|
| Sound Recognition | AI listening skills, heads-up on problems | Cuts down costs, keeps everything humming smoothly |

By catching the earliest signs of trouble, Neuron saves the day and your wallet (Omdena).
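The article doesn’t say how Neuron Soundware’s system works internally, so here is a generic stand-in for the idea of “listening” for trouble, not their method: acoustic anomaly detection. This minimal Python sketch assumes audio arrives as a plain list of samples, summarizes each frame by its root-mean-square (RMS) energy, and flags frames that stray more than three standard deviations from a baseline recorded on a healthy machine. The signals, frame size, and threshold are all hypothetical choices for illustration.

```python
import math
import random
import statistics


def frame_rms(samples, frame_size):
    """Split a signal into fixed-size frames and return each frame's RMS energy."""
    return [
        math.sqrt(sum(x * x for x in samples[i:i + frame_size]) / frame_size)
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def find_anomalies(baseline, live, frame_size=256, threshold=3.0):
    """Return indices of live frames whose RMS energy sits more than
    `threshold` standard deviations away from the healthy baseline."""
    base = frame_rms(baseline, frame_size)
    mean, stdev = statistics.mean(base), statistics.stdev(base)
    return [
        i for i, rms in enumerate(frame_rms(live, frame_size))
        if abs(rms - mean) > threshold * max(stdev, 1e-12)
    ]


# Hypothetical recordings at 8 kHz: a healthy 50 Hz motor hum plus a little noise.
rng = random.Random(42)
RATE = 8000


def hum(t):
    return math.sin(2 * math.pi * 50 * t / RATE) + rng.gauss(0, 0.05)


healthy = [hum(t) for t in range(2 * RATE)]
live = [hum(t) for t in range(RATE)]
# Simulate a rattle: a loud 900 Hz burst confined to frame 10.
for t in range(10 * 256, 11 * 256):
    live[t] += 1.5 * math.sin(2 * math.pi * 900 * t / RATE)

print(find_anomalies(healthy, live))  # [10]
```

A real system would use richer features (frequency spectra, learned models) rather than raw loudness, but the pattern is the same: learn what “normal” sounds like, then raise a flag when the machine stops sounding normal.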

Moon’s Robotic Surgery Magic

Moon Surgical is making the scalpel a bit jealous. By mixing clever vision tools with top-notch robotic hands, they’re aiming to make surgeries safer and faster, with clinical trials pointing to benefits for everyone in the operating room.

| Tech | Features | Perks |
|---|---|---|
| Robotic Surgery Tools | Vision systems, robotic precision | Spot-on surgery, happy docs and patients |

Experience the future of surgery with Moon’s robo-wonders. (Omdena)

Falkonry’s Plant Whisperer

Falkonry is the sneaky brain that helps factories work better. This platform spots trouble early and suggests fixes before anything major happens, so everything keeps running like clockwork without unwanted halts.

| Tech | Features | Why It’s a Must |
|---|---|---|
| Decision Platform | AI brainpower, fast solutions | Smooth operations, less downtime |

Keep the factory floor in check with Falkonry’s smart advice. (Omdena)

These nifty AI innovations are shaking things up in tech land, sparking changes across loads of industries and making room for even bigger breakthroughs.

Advancements in Quantum Computing

The Power of Qubits

Quantum computing? It’s like regular computing on steroids, turbo-charged with qubits! Instead of sticking to boring old ones and zeros, qubits use quantum mechanics to hold a blend of states (a superposition) at once. For certain kinds of problems, that means quantum computers can pack way more punch than your granddad’s old PC ever could.

So, what’s all the fuss about? Well, imagine having a computer that blows past 5,000 qubits! That’s like strapping boosters onto your calculations. It’s a game-changer for tackling those tangled-up problems that make classical computers throw in the towel (SmithySoft).
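The “multiple states at once” idea can be made concrete with a tiny state-vector simulation in plain Python. This is a teaching sketch, not how real quantum hardware is programmed: one qubit is modeled as two complex amplitudes, a Hadamard gate turns |0⟩ into an equal superposition, and measurement probabilities are the squared magnitudes of the amplitudes. It also hints at why qubits are hard to mimic classically: a full description of n qubits needs 2**n amplitudes.

```python
import math

# A qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# with |alpha|^2 + |beta|^2 == 1.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate H = (1/sqrt(2)) * [[1, 1], [1, -1]]."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Born rule: the chance of measuring 0 or 1 is |amplitude|^2."""
    return tuple(abs(amp) ** 2 for amp in state)


def amplitudes_needed(n_qubits):
    """A classical simulator must track 2**n complex amplitudes for n qubits."""
    return 2 ** n_qubits


superposed = hadamard(ZERO)
print([round(p, 6) for p in probabilities(superposed)])            # [0.5, 0.5]
print([round(p, 6) for p in probabilities(hadamard(superposed))])  # [1.0, 0.0]
print(amplitudes_needed(50))                                       # 1125899906842624
```

Applying the gate twice returns the qubit to |0⟩, which shows that amplitudes can interfere rather than just acting like hidden coin flips. For 5,000 qubits, `amplitudes_needed(5000)` is a 1,506-digit number, far beyond what any classical memory could store.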

| Machine | Type | Qubit Count | Synaptic Operations per Second |
|---|---|---|---|
| Quantum computer | Quantum | 5,000+ | Not applicable |
| DeepSouth | Neuromorphic supercomputer | Not applicable | 228 trillion |

Quantum gizmos aren’t just for showing off; they’re super handy in spots where you need big brainpower, like cracking codes and exploring the stars (Internxt Blog). Speaking of codes, a large enough quantum machine could bust open today’s public-key encryption, so folks are busy coming up with new post-quantum tricks to keep secrets safe.

And then there’s the curious case of neuromorphic supercomputers such as DeepSouth. They don’t use qubits at all; instead, they mimic the way the brain’s neurons and synapses fire. Think of it as getting a machine to channel its inner Einstein: 228 trillion synaptic operations per second, folks! It’s like having a thousand brainstorms at once (SmithySoft).

Quantum computing is running at full throttle, ready to flip how we solve stuff upside down. This isn’t science fiction anymore; it’s happening fast and could shake up just about everything. Who knew playing with qubits could drag us all into the future so quickly?

Edge AI and its Applications

Edge AI is shaking things up by bringing data crunching magic straight to the gadgets where data first pops up. Here, you’ll get the lowdown on what makes Edge AI tick, its explosive market boom, and the hardware wizards that pull the strings.

Edge AI Technology Overview

Edge AI isn’t about some sci-fi extravaganza but rather the down-to-earth deployment of AI magic on devices chilling at the edge of the net. Think of it as a savvy local chef whipping up a meal without needing to call the fancy downtown restaurant. This tech shines bright where snap decisions are a must, like with self-driving cars, super-smart cameras, and those snazzy industrial systems that keep the wheels turning.

Why is Edge AI rocking the show? It’s all about slashing wait time. When devices handle their own data, they can pop out answers pronto. Plus, it’s a bandwidth saver, keeping network chatter to a minimum and giving centralized data hubs a break. Got a thing for eye-popping video analytics? Edge AI’s got your back with slick Convolutional Neural Networks (CNNs) running right on the device (Xailient).
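The core operation a CNN repeats on every video frame is a 2D convolution: a small filter slides across the image and produces a weighted sum at each position. Here is a minimal pure-Python sketch, assuming a grayscale frame stored as a list of lists and a hand-picked vertical-edge filter. Real CNNs learn their filter weights during training and run on optimized hardware; like most deep-learning libraries, this version computes cross-correlation (the kernel isn’t flipped).

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation, as in most CNN libraries):
    slide `kernel` over `image` with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """CNNs typically follow a convolution with a ReLU non-linearity."""
    return [[max(0, v) for v in row] for row in feature_map]


# A tiny 4x5 "frame": dark on the left, bright on the right.
frame = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]
# Hypothetical hand-made filter that responds to dark-to-bright vertical edges.
edge_filter = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
print(relu(conv2d(frame, edge_filter)))  # [[27, 27, 0], [27, 27, 0]]
```

The output lights up only where the filter straddles the edge and is zero over the flat bright area, which is exactly the kind of compact feature map an edge device can act on without shipping raw video anywhere.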

Growth of Edge AI Software Market

The global Edge AI software market isn’t just growing; it’s sprouting wings. As stated by Xailient, it’s set to zoom from a humble $590 million in 2020 to a whopping $1.83 billion by 2026. What’s blowing up the numbers?

- More workloads are moving to the cloud.

- Smart gadgets are popping up everywhere.

- AI and its nerdy algorithms are seeing a growth spurt.

- 5G is rolling out, bringing a speed boost like never before.

| Year | Market Size (USD) |
|---|---|
| 2020 | 590 million |
| 2021 | 740 million |
| 2022 | 1.01 billion |
| 2023 | 1.36 billion |
| 2024 | 1.65 billion |
| 2025 | 1.51 billion |
| 2026 | 1.83 billion |
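Growth forecasts like this are usually summarized as a compound annual growth rate (CAGR). A quick check in Python, using only the endpoints of $590 million in 2020 and $1.83 billion in 2026, shows the yearly growth rate those two figures imply:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(590e6, 1.83e9, 2026 - 2020)
print(f"{rate:.1%}")  # 20.8%
```

In other words, the market roughly triples over six years by growing about a fifth every year.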

Hardware Acceleration in Edge AI

Edge AI is hungry for power—computing power, that is—and hardware acceleration is its buffet. Chips like Graphics Processing Units (GPUs), Field Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs) come into play to juice up Edge AI gadgets. These muscle engines are all about handling the nitty-gritty of deep learning models.

- GPUs: These guys love chewing through parallel tasks, which makes them just right for deep learning gigs.

- FPGAs: Adaptable and reprogrammable for a smorgasbord of tasks, they strike a balance between oomph and resourcefulness.

- ASICs: Built with a singular focus, these are task-specific dynamos offering unmatched efficiency for their designated roles.

Thanks to these tech wonders, running machine learning on the device gets a speed boost, while conserving bandwidth too. With savvy tech parked right on the edge, we’re ushering in a future ripe with smarter apps and analytics (Xailient).

Emerging Technologies in Information Processing

As we all know, our hunger for quick and dependable data processing is always on the rise, sparking fresh innovations to tackle this ever-growing appetite. Two budding talents in this arena are synthetic data for AI and the marvel known as TinyML.

Synthetic Data for AI Algorithms

Imagine a world where you could skip the moral juggling act of data privacy woes. Enter synthetic data – the knight in not-so-shiny armor. It’s all about creating fake—but useful—data to train AI where real data just won’t cut it. Bye-bye, sensitive info nightmares! With synthetic data sliding in, getting your AI project off the ground becomes a breeze, cutting both time and red tape (Xailient).
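One simple way to produce synthetic data is to fit summary statistics on real records and then sample brand-new records from the fitted distribution. Here is a minimal sketch with Python’s standard library, assuming a single numeric column that is roughly normally distributed; the heart-rate values are hypothetical. Production tools model many columns and the correlations between them, and still need privacy checks, since a fitted model can leak information about very small datasets.

```python
import random
import statistics


def fit_and_sample(real_values, n_samples, seed=0):
    """Fit a normal distribution to real data and draw synthetic values from it.

    Only the mean and standard deviation of the real data are kept,
    so the generator never copies an individual real record."""
    mu = statistics.mean(real_values)
    sigma = statistics.stdev(real_values)
    rng = random.Random(seed)  # seeded so the example is reproducible
    return [rng.gauss(mu, sigma) for _ in range(n_samples)]


# Hypothetical "real" patient heart rates (beats per minute).
real_heart_rates = [72, 68, 75, 80, 66, 71, 77, 69, 74, 70]
synthetic = fit_and_sample(real_heart_rates, n_samples=10_000)

print(len(synthetic), round(statistics.mean(synthetic), 1), round(statistics.stdev(synthetic), 1))
```

Ten real readings turn into ten thousand synthetic ones with roughly the same average and spread, which is the scalability benefit in a nutshell.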

Benefits of Synthetic Data

- Privacy Protection: No real people’s details mean sleeping better at night.

- Scalability: Whip up a big pile of data without running around collecting it.

- Versatility: Flexible enough to fit into any situation or scenario.

| Aspect | Real Data | Synthetic Data |
|---|---|---|
| Privacy Risks | High | Low |
| Collection Time | Long | Short |
| Cost | High | Low |
| Versatility | Limited | High |

The Rise of TinyML

Now, let’s chat about TinyML, where small machines perform big magic. It’s all about running machine learning models on tiny microcontrollers that barely sip power, making it perfect for gadgets running on just a couple of AA batteries. This is the edge of innovation that lets devices stay always on and quick to respond (Xailient).
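A common trick for squeezing models onto battery-powered microcontrollers is quantization: storing weights as 8-bit integers instead of 32-bit floats, which cuts the memory the weights need by about 4x. Here is a minimal sketch of symmetric int8 quantization in Python, assuming a single scale factor for the whole weight list; real TinyML toolchains use more refined schemes, and the weight values are made up for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.05, 0.9, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q)  # [42, -127, 5, 90, -33]
# Rounding error per weight is at most half a quantization step.
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)  # True
```

The device only needs to store the small integers plus one float `scale`, and integer math is also cheaper for low-power chips that lack a floating-point unit.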

Applications of TinyML

- Wearables: Watch your heartbeat with gizmos that update faster than your pulse.

- Environmental Monitoring: Little spies in the wild tracking the weather and more without nagging you for a recharge.

- Smart Home Devices: Gear that hops to action thanks to its quick brainwork.

| Use Case | Benefit |
|---|---|
| Wearables | Real-time health monitoring |
| Environmental Sensors | Long battery life |
| Smart Home Devices | Immediate response |

With these innovations, tech enthusiasts can take a big leap into the future, enhancing how data is processed while keeping it sleek and safe.

The Future of Cloud Computing

Industry Growth Predictions

Cloud computing keeps shaking up tech in a big way, and it’s not slowing down anytime soon. New processor tech is adding to the mix by boosting processing speed and making things run smoother.

Industry pros are betting on the cloud market soaring to a jaw-dropping $2,432.87 billion by 2030, with internet-based services offering affordable solutions. Heavy hitters like Amazon Web Services, Google Cloud, and Microsoft Azure keep rolling out fresh ideas to keep up with rising demand.

Cloud computing is poised to be a game changer, with businesses jumping on the bandwagon. Going beyond just a tool, it’s expected to spark major business innovation (LinkedIn).

| Company | Market Share |
|---|---|
| Amazon Web Services | 33% |
| Microsoft Azure | 22% |
| Google Cloud | 9% |

The growth surge in cloud computing is backed by advancements in processor technologies, bringing speed and reliability to cloud services. Smarter computation means better data handling, storage solutions, and smooth app delivery.

By getting on board with these tech jumps, businesses should anticipate more secure and efficient cloud operations, pushing both tech strides and business innovation ahead.

Cloud computing’s future isn’t just about running apps; it’s about paving new paths for discovery and growth across different fields.

Key Trends in Robotics

Robotics is bustling with action these days, mixing in new processor tech to make some jaw-dropping gadgets. Let’s check out a couple of trends that are changing how robots are built and what they can pull off.

Integration of AI and Machine Learning

AI and machine learning are spearheading the robot revolution. These tech wizards let robots soak up knowledge from their surroundings, tackle various real-world tasks, and work like a boss with remarkable accuracy. Thanks to AI and ML, we’ve got some snazzy robots like “Ameca” and “Sophia” running around, showing off human-like chats and clever tricks (SmithySoft).

AI and ML Perks in Robotics:

- Sharper Senses: Robots get a better grip on the world around them.

- Quick Thinkers: AI crunches data fast, helping with solid decision-making.

- Adjustable Actions: Robots can pick up new tricks and adapt to their environment and tasks.

Industries like aerospace, online shopping, and car manufacturing are putting AI-driven robots to work to boost productivity, efficiency, and quality (LinkedIn).

| Industry | How They’re Using AI in Robots |
|---|---|
| Aerospace | Inspecting quality, streamlining assembly |
| E-commerce | Managing stock, sorting orders |
| Automotive | Self-driving cars, precision production |

Human-like Robot Advancements

Robots that act more human and have a knack for delicate tasks and complex thinking are on the rise. They’re built to gel well with people, helping in everything from customer service to medicine.

Standout Human-like Robots:

- Ameca: Famous for its super real facial expressions and smooth moves.

- Sophia: A chatty bot that can engage in deep convo and show emotions (SmithySoft).

These bots are getting better thanks to new sensor tech, language understanding, and actuator design. This progress lets robots complete jobs with high accuracy and work smoothly alongside humans.

| Robot Name | Cool Feature | Where You’ll Find It |
|---|---|---|
| Ameca | Life-like facial expressions | Shows, public presentations |
| Sophia | Engaging conversational skills | Customer help, health settings |

Wrapping it all up, the mashup of AI and ML with robotics, along with headway in making human-like bots, puts the spotlight on how fresh processor tech is reshaping the future of robots.

Current Challenges in Processor Speed

When it comes to what slows chips down and how processors claw that speed back, two techniques take center stage: branch prediction and out-of-order execution, which lets instructions run in a different order than they were written.

Understanding Branch Prediction

Branch prediction is a trick to keep the CPU’s pipeline flowing despite the detours in code. A modern CPU starts working on upcoming instructions before earlier ones finish, so when it reaches a conditional branch (an if statement or a loop test), it often doesn’t know yet which way the code will go. Imagine you’re driving and come to a fork in the road (pardon the pun): stop to check the map, and traffic backs up behind you. So the CPU guesses and keeps going. If the guess turns out wrong, it has to toss out the work it already did down the wrong path and start over, wasting precious time.

To keep those wrong turns rare, CPUs come with built-in smarty pants known as branch predictors. Dynamic predictors keep track of how each branch behaved recently and bet that it will do the same again, which cuts down on how often the CPU has to rewind and replay.

| Branch Prediction Method | Accuracy Rate |
|---|---|
| Static Prediction | 50% – 65% |
| Dynamic Prediction | 85% – 98% |
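A classic dynamic scheme is the 2-bit saturating counter: the predictor only flips its guess after being wrong twice in a row, so a single odd outcome doesn’t throw it off. The sketch below simulates that predictor alongside a static “always predict taken” rule on a hypothetical branch history. It’s a teaching model, not how any particular CPU implements prediction, and the accuracies it prints depend entirely on the made-up pattern.

```python
def static_accuracy(outcomes):
    """Static prediction: always guess 'taken'; accuracy = share of taken branches."""
    return sum(outcomes) / len(outcomes)


def two_bit_accuracy(outcomes, state=2):
    """Dynamic prediction with a 2-bit saturating counter.

    States 0-1 predict 'not taken', states 2-3 predict 'taken'. Each real
    outcome nudges the counter one step toward it, saturating at 0 and 3."""
    correct = 0
    for taken in outcomes:
        prediction = state >= 2
        correct += prediction == taken
        state = min(state + 1, 3) if taken else max(state - 1, 0)
    return correct / len(outcomes)


# Hypothetical history: a branch like "if value >= 128" inside a loop over
# sorted data is not taken 20 times, then taken 20 times.
history = [False] * 20 + [True] * 20

print(f"static:  {static_accuracy(history):.1%}")   # static:  50.0%
print(f"dynamic: {two_bit_accuracy(history):.1%}")  # dynamic: 92.5%
```

The counter pays for a couple of misses each time the branch changes its habits, then predicts correctly for the rest of the run, while the static rule is stuck being wrong for the whole first half.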

Out-of-Order Execution Techniques

Out-of-order execution is like letting the CPU multitask by cherry-picking what it can deal with first, kinda like eating dessert before finishing all the veggies if no one’s watching. Some instructions depend on others getting done first, like needing to finish digging a hole before you can plant a tree. But instructions that don’t depend on each other don’t have to wait their turn; the CPU can run them in the meantime, sidestepping idle time.

By running tasks as soon as the tools and inputs are ready, processors make the most of their resources, squeezing more out of the same clock speeds.

| Instruction Execution Mode | Efficiency |
|---|---|
| In-Order Execution | Slowed by waiting games |
| Out-of-Order Execution | Really gets things moving, cuts back on delays |
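The payoff of out-of-order execution can be sketched with a toy scheduler. This simplified model assumes one instruction issued per cycle, unlimited execution units, made-up latencies, and a hypothetical five-instruction program in which a slow load stalls the add that needs its result. Real cores also rename registers and retire results in program order, which the sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    name: str
    latency: int      # cycles until the result is available
    deps: tuple = ()  # indices of earlier instructions whose results it needs


def in_order_cycles(program):
    """Issue one instruction per cycle, strictly in program order:
    a stalled instruction blocks everything behind it."""
    finish, last_start = [], -1
    for ins in program:
        start = max([last_start + 1] + [finish[d] for d in ins.deps])
        finish.append(start + ins.latency)
        last_start = start
    return max(finish)


def out_of_order_cycles(program):
    """Issue one instruction per cycle, picking the oldest one whose inputs
    are ready, so independent work slips past stalled instructions."""
    finish = [None] * len(program)
    cycle = 0
    while None in finish:
        for i, ins in enumerate(program):
            ready = all(finish[d] is not None and finish[d] <= cycle for d in ins.deps)
            if finish[i] is None and ready:
                finish[i] = cycle + ins.latency
                break
        cycle += 1
    return max(finish)


program = [
    Instr("load r1, [mem]", latency=4),
    Instr("add  r2, r1, 1", latency=1, deps=(0,)),
    Instr("mul  r3, r4, r5", latency=3),
    Instr("add  r6, r3, r2", latency=1, deps=(1, 2)),
    Instr("sub  r7, r8, r9", latency=1),
]
print(in_order_cycles(program), out_of_order_cycles(program))  # 10 6
```

Same instructions, same latencies, same one-per-cycle issue rate: the only difference is that the out-of-order scheduler starts the independent `mul` and `sub` while the load is still in flight, finishing in 6 cycles instead of 10.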

Cracking these challenges is key to making each new processor generation not just faster but smarter, squeezing out more performance even without cranking up the clock speed (Super User).